Testability Guideline

Challenges in Test Automation can be caused by issues in the Test Design. However, they can also indicate flaws in the architecture of the System Under Test (SUT): if the architecture makes the system hard to configure and analyze, it will be equally hard to test.

Thus, problems with testing are themselves test results:

"If automated checks are difficult to develop and maintain, does that say something about the skill of the tester, the quality of the automation interfaces, or the scope of checks? Or about something else?" (Michael Bolton, 2011).

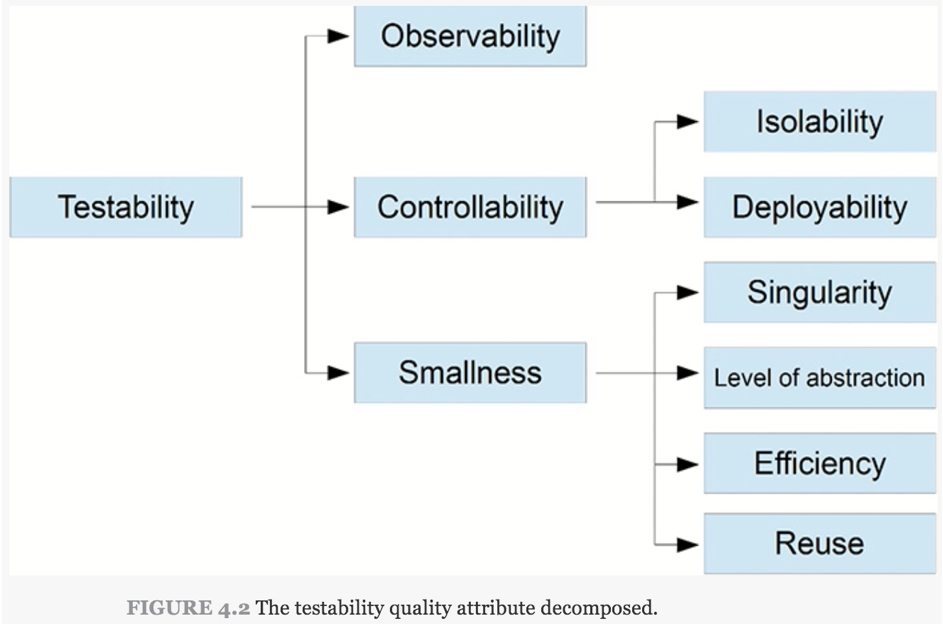

If you want to focus on the testability of your system, consider the quality attributes described in this guideline.

Testability requirements such as Observability and Smallness are also key aspects of microservices and Continuous Delivery, and they support well-known modern engineering practices. If you practice Continuous Delivery, you should work in small increments, which boosts testability. Also, use static code analysis tools early in your deployment pipeline to measure cyclomatic complexity and other metrics.
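As a rough illustration of such a pipeline check, the sketch below approximates McCabe's cyclomatic complexity (1 plus the number of decision points) for each Python function using the standard `ast` module, and reports functions above a threshold. The function names and the default threshold of 10 are assumptions made for this example; in practice, a dedicated tool such as `radon` or the `mccabe` plugin for flake8 is the more complete choice.

```python
from __future__ import annotations

import ast

# Control-flow nodes that each add one decision point (simplified approximation
# of McCabe's metric; match/case, comprehension filters, etc. are not counted).
DECISION_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp, ast.ExceptHandler)


def cyclomatic_complexity(func: ast.AST) -> int:
    """Return 1 + the number of decision points found under `func`.

    Note: branches inside nested functions also count toward the outer one.
    """
    complexity = 1
    for node in ast.walk(func):
        if isinstance(node, DECISION_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` contains two short-circuit branches.
            complexity += len(node.values) - 1
    return complexity


def complexity_report(source: str) -> dict[str, int]:
    """Map every function name in `source` to its approximate complexity."""
    tree = ast.parse(source)
    return {
        node.name: cyclomatic_complexity(node)
        for node in ast.walk(tree)
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    }


def check_complexity(source: str, threshold: int = 10) -> list[str]:
    """Return the names of functions whose complexity exceeds `threshold`.

    A pipeline step could fail the build when this list is not empty.
    """
    return [name for name, value in complexity_report(source).items() if value > threshold]


if __name__ == "__main__":
    sample = """
def classify(x):
    if x < 0:
        return "negative"
    elif x == 0:
        return "zero"
    return "positive"
"""
    print(complexity_report(sample))  # {'classify': 3}
```

Running the check on every commit, rather than only before a release, keeps the feedback in the small increments mentioned above.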

Let’s look at some quality attributes, referring to the book “Developer Testing: Building Quality into Software” by Alexander Tarlinder:

Observability

Observability is the ability to monitor what the software is doing, what it has done, and the states that result. It answers the question: is it possible to write a test for a specific feature or requirement by observing the system under test to verify its functionality?
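One way to make what the software has done observable is to let it report events through an injectable callback, and to expose the resulting state through a query method rather than through private fields. The `OrderService` class below is a hypothetical example under that assumption; production code might forward the events to a log or message bus, while a test simply collects them in a list.

```python
from __future__ import annotations

from typing import Callable


class OrderService:
    """Hypothetical service that reports what it has done via an event callback."""

    def __init__(self, publish: Callable[[dict], None] | None = None) -> None:
        # Any callable accepting an event dict; defaults to discarding events.
        self._publish = publish or (lambda event: None)
        self._orders: dict[str, str] = {}

    def place_order(self, order_id: str) -> None:
        self._orders[order_id] = "PLACED"
        self._publish({"type": "order_placed", "order_id": order_id})

    def cancel_order(self, order_id: str) -> None:
        if self._orders.get(order_id) != "PLACED":
            raise ValueError(f"order {order_id} cannot be cancelled")
        self._orders[order_id] = "CANCELLED"
        self._publish({"type": "order_cancelled", "order_id": order_id})

    def status(self, order_id: str) -> str | None:
        """Expose the resulting state through a query instead of internals."""
        return self._orders.get(order_id)


if __name__ == "__main__":
    # In a test, a plain list acts as the event sink.
    events: list[dict] = []
    service = OrderService(publish=events.append)
    service.place_order("A-1")
    service.cancel_order("A-1")
    assert service.status("A-1") == "CANCELLED"
    assert [e["type"] for e in events] == ["order_placed", "order_cancelled"]
```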

Note that observability also includes logging. For example, performance tests may require the application to log specific response times. Those log entries should use a standard format so that test tools can parse them reliably.
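A minimal sketch of such a standard format, assuming one JSON object per log line is the agreed convention: the formatter below uses only the standard `logging` and `json` modules, and the context manager records the elapsed time of a block in milliseconds. The names `JsonFormatter`, `log_response_time`, and the field names `operation` and `duration_ms` are hypothetical choices for this example.

```python
import json
import logging
import time
from contextlib import contextmanager


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON object (assumed house format)."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured fields passed via `extra={"fields": {...}}` are merged in.
        entry.update(getattr(record, "fields", {}))
        return json.dumps(entry)


@contextmanager
def log_response_time(logger: logging.Logger, operation: str):
    """Log the elapsed wall-clock time of the wrapped block in milliseconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000
        logger.info(
            "response time",
            extra={"fields": {"operation": operation, "duration_ms": round(elapsed_ms, 3)}},
        )


if __name__ == "__main__":
    logger = logging.getLogger("checkout")
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)

    with log_response_time(logger, "GET /cart"):
        time.sleep(0.01)  # stands in for the real request handling
```

Because every entry has the same keys, a performance test can parse the log lines with `json.loads` instead of matching free-text messages.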

Controllability

Controllability is the ability to put the system under test into the state that a test expects.

"Along with observability, control is probably the key area for testability, particularly so if wanting to implement any automation. Being able to cleanly control the functionality to the extent of being able to manipulate the state changes that can occur within the system in a deterministic way is hugely valuable to any testing efforts and a cornerstone of successful automation." (Adam Knight, 2012).

When you work on a new feature, being able to control its state so that you can test it is crucial. For example, making that state controllable could be part of the Definition of Done (DoD).
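A common way to make time-dependent state controllable is to inject the clock instead of reading system time directly. The sketch below assumes a hypothetical `Session` that expires after a timeout; production code uses `time.monotonic` by default, while a test passes a `FakeClock` whose time changes only when the test advances it, so the state change happens deterministically and without sleeping.

```python
from __future__ import annotations

import time
from typing import Callable


class Session:
    """Hypothetical session that expires after `timeout_s` seconds of inactivity."""

    def __init__(self, timeout_s: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._timeout_s = timeout_s
        self._clock = clock  # injected source of "now"
        self._last_activity = clock()

    def touch(self) -> None:
        """Record activity, which resets the expiry countdown."""
        self._last_activity = self._clock()

    def is_expired(self) -> bool:
        return self._clock() - self._last_activity >= self._timeout_s


class FakeClock:
    """Test clock: returns a fixed time until the test explicitly advances it."""

    def __init__(self, start: float = 0.0) -> None:
        self.now = start

    def __call__(self) -> float:
        return self.now

    def advance(self, seconds: float) -> None:
        self.now += seconds


if __name__ == "__main__":
    clock = FakeClock()
    session = Session(timeout_s=30, clock=clock)
    clock.advance(29)
    assert not session.is_expired()
    clock.advance(1)
    assert session.is_expired()
```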

Isolateability and decomposability

This describes the degree to which the SUT can be tested in isolation, i.e., whether its software and data dependencies can be replaced by mocks or other test doubles.

Note that being able to (unit) test the SUT in isolation is one of the F.I.R.S.T. principles of unit testing (http://agileinaflash.blogspot.de/2009/02/first.html).
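For illustration, a minimal sketch assuming a hypothetical `PriceService` that receives its dependencies (a product catalog and an exchange-rate client) through its constructor instead of creating them itself. Because of that, a test can replace both with `unittest.mock.Mock` objects and exercise the pricing logic without a database or network.

```python
from unittest.mock import Mock


class PriceService:
    """Hypothetical SUT whose dependencies are passed in, not created inside."""

    def __init__(self, catalog, exchange_rates) -> None:
        self._catalog = catalog  # e.g. a database-backed repository in production
        self._exchange_rates = exchange_rates  # e.g. a remote HTTP client in production

    def price_in(self, product_id: str, currency: str) -> float:
        base_price_eur = self._catalog.price_eur(product_id)
        rate = self._exchange_rates.rate("EUR", currency)
        return round(base_price_eur * rate, 2)


if __name__ == "__main__":
    # Test doubles stand in for the real dependencies.
    catalog = Mock()
    catalog.price_eur.return_value = 10.0
    rates = Mock()
    rates.rate.return_value = 1.1

    service = PriceService(catalog, rates)
    assert service.price_in("p-1", "USD") == 11.0
    rates.rate.assert_called_once_with("EUR", "USD")
```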

Deterministic and repeatable deployment

The deployment of the SUT is automated and deterministic, so that tests are repeatable and can be automated. Note that this is also a key criterion for Continuous Delivery.
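One small ingredient of a deterministic deployment is pinning exact dependency versions, so that two deployments of the same commit install the same software. The sketch below is a hypothetical pipeline check that assumes pip-style requirement lines and flags every line without an exact `==` pin. It is deliberately simplified (for example, it skips pip options such as `-r` or `-e` rather than judging them) and covers only one aspect of determinism.

```python
from __future__ import annotations

import re

# Exact pin such as "requests==2.31.0" or "pkg[extra]==1.0"; wildcards like
# "4.*" and ranges like "==1.0,<2" are treated as not pinned.
_PINNED = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*(\[[^\]]*\])?\s*==\s*[^\s,;*]+(\s|;|$)")


def unpinned_requirements(lines: list[str]) -> list[str]:
    """Return the requirement lines that do not pin an exact version."""
    problems = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line or line.startswith("-"):
            # Blank lines and pip options (-r, -e, --index-url, ...) are skipped here.
            continue
        if not _PINNED.match(line):
            problems.append(line)
    return problems


if __name__ == "__main__":
    requirements = ["requests==2.31.0", "flask>=2.0", "numpy"]
    print(unpinned_requirements(requirements))  # ['flask>=2.0', 'numpy']
```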

Separation of concerns

Do small changes in the code cause problems that are disproportionately large or hard to find? That is a sign of poor separation of concerns. Separation of concerns is the degree to which each part of the SUT has a single, well-defined responsibility. Different components shall communicate via APIs.

In particular, microservices should be designed so that they meet the following key criteria:

- Each microservice is responsible for a single piece of functionality.

- They are truly loosely coupled, since each microservice is physically separated from the others (e.g., it runs in its own container).

- Loose coupling also implies that microservices shall communicate via APIs and shall not share data.

- Dependencies required to provide the functionality shall be embedded in the microservice.

- Each microservice can be built with any set of tools or languages, since each is independent of the others.

Thus, separation of concerns makes it easier to test the SUT in isolation.
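As a small illustration within a single component, the sketch below separates a pure business rule (`order_total`) from a thin adapter that handles the wire format (`handle_total_request`). All names and the JSON payload shape are hypothetical. The rule can be tested in isolation without any I/O, and a change to the payload format touches only the adapter.

```python
from __future__ import annotations

import json
from dataclasses import dataclass


@dataclass(frozen=True)
class LineItem:
    unit_price: float
    quantity: int


def order_total(items: list[LineItem], discount_rate: float = 0.0) -> float:
    """Pure business rule: no I/O, so it can be tested in isolation."""
    if not 0.0 <= discount_rate < 1.0:
        raise ValueError("discount_rate must be in [0, 1)")
    subtotal = sum(item.unit_price * item.quantity for item in items)
    return round(subtotal * (1 - discount_rate), 2)


def handle_total_request(body: str) -> str:
    """Thin adapter: translates between JSON and the domain function only."""
    payload = json.loads(body)
    items = [LineItem(i["unit_price"], i["quantity"]) for i in payload["items"]]
    total = order_total(items, payload.get("discount_rate", 0.0))
    return json.dumps({"total": total})


if __name__ == "__main__":
    print(order_total([LineItem(10.0, 2), LineItem(5.0, 1)], discount_rate=0.1))  # 22.5
```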

Separation of concerns is supported by design principles such as:

- Interface Segregation design principle

- Open/Closed design principle

- Postel’s Law (also known as the Robustness Principle)
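Postel’s Law is usually summarized as "be conservative in what you do, be liberal in what you accept from others". Applied to an API consumer, it resembles a tolerant reader. The sketch below assumes a hypothetical JSON customer payload: the parser ignores fields it does not need and accepts the ID as a number or a string, while the serializer always emits the same fields in a stable order. The exact tolerance rules are assumptions for this example.

```python
from __future__ import annotations

import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    customer_id: str
    email: str


def parse_customer(payload: str) -> Customer:
    """Liberal in what we accept.

    Unknown fields are ignored, so the provider can add fields without breaking
    this consumer; the required fields are still read (KeyError if missing).
    """
    data = json.loads(payload)
    customer_id = str(data["id"])  # accept 42 or "42"
    email = str(data["email"]).strip()  # tolerate surrounding whitespace
    return Customer(customer_id=customer_id, email=email)


def serialize_customer(customer: Customer) -> str:
    """Conservative in what we send: same fields, same types, stable key order."""
    return json.dumps({"id": customer.customer_id, "email": customer.email}, sort_keys=True)


if __name__ == "__main__":
    incoming = '{"id": 42, "email": " a@example.com ", "loyaltyTier": "gold"}'
    print(serialize_customer(parse_customer(incoming)))  # {"email": "a@example.com", "id": "42"}
```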

Note that Test-Driven Development (TDD) can help ensure testability, and findings from testing can prompt improvements to the architecture. However, there is also a risk of “test-induced design damage”: overusing design changes made only for the sake of testability can introduce design flaws:

"Such damage is defined as changes to your code that either facilitates a) easier test-first, b) speedy tests, or c) unit tests, but does so by harming the clarity of the code through — usually through needless indirection and conceptual overhead." (David Heinemeier Hansson, Apr 29, 2014).

This risk should be taken into account. However, by applying common design principles such as Interface Segregation, Open/Closed, and the Robustness Principle, your software can support testability while avoiding test-induced design damage.
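As an illustration of Interface Segregation serving testability, the sketch below assumes a hypothetical `ShippingNotifier` that depends on two narrow `typing.Protocol` interfaces instead of a full customer repository and mail client. A test then needs only tiny fakes with one method each, and no extra indirection layer is introduced solely for the test.

```python
from __future__ import annotations

from typing import Protocol


class CustomerEmailLookup(Protocol):
    """Narrow role interface: the only customer capability the notifier needs."""

    def email_for(self, customer_id: str) -> str: ...


class MailSender(Protocol):
    def send(self, to: str, subject: str) -> None: ...


class ShippingNotifier:
    """Hypothetical consumer depending on two small interfaces."""

    def __init__(self, customers: CustomerEmailLookup, mail: MailSender) -> None:
        self._customers = customers
        self._mail = mail

    def notify_shipped(self, customer_id: str, order_id: str) -> None:
        self._mail.send(self._customers.email_for(customer_id), f"Order {order_id} has shipped")


# Test doubles: each implements just the one method its interface declares.
class FakeCustomers:
    def email_for(self, customer_id: str) -> str:
        return f"{customer_id}@example.com"


class RecordingMail:
    def __init__(self) -> None:
        self.sent: list[tuple[str, str]] = []

    def send(self, to: str, subject: str) -> None:
        self.sent.append((to, subject))
```

Usage in a test: `mail = RecordingMail()`, then `ShippingNotifier(FakeCustomers(), mail).notify_shipped("c1", "o9")`, after which `mail.sent` holds the single recorded message.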

See also

- Alexander Tarlinder (2016): Developer Testing: Building Quality into Software.

- David Heinemeier Hansson (Apr 29, 2014): Test-induced design damage. http://david.heinemeierhansson.com/2014/test-induced-design-damage.html

- Jose Luis Ordiales Coscia (Apr 5, 2017): Why you should follow the Robustness Principle in your APIs. https://engineering.klarna.com/why-you-should-follow-the-robustness-principle-in-your-apis-b77bd9393e4b

- Michael Bolton (2011): Testing Problems are Test Results. https://www.developsense.com/blog/2011/09/testing-problems-are-test-results/

- Robert C. Martin (2008): Clean Code.